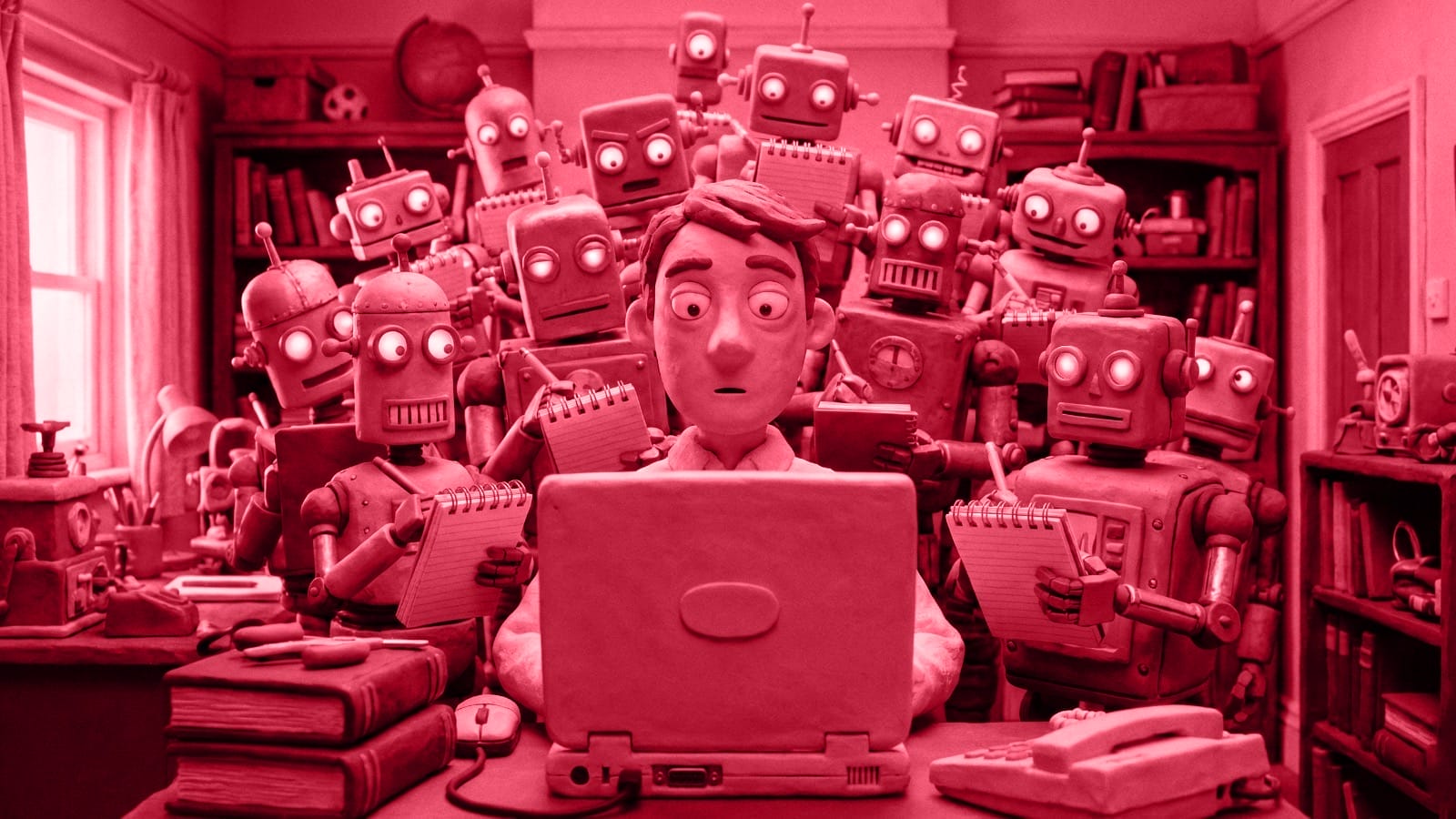

Public resistance to AI grows as adoption stalls and protests spread

Public skepticism toward artificial intelligence is deepening across the United States, even as tech companies pour billions of dollars into the technology. Protests, lawsuits, political campaigns, and union contracts are emerging as tools for people pushing back against an industry that many Americans feel is moving too fast and ignoring their concerns. A 2025 Pew …