Alibaba releases Qwen3.5, a multimodal AI model with 397 billion parameters

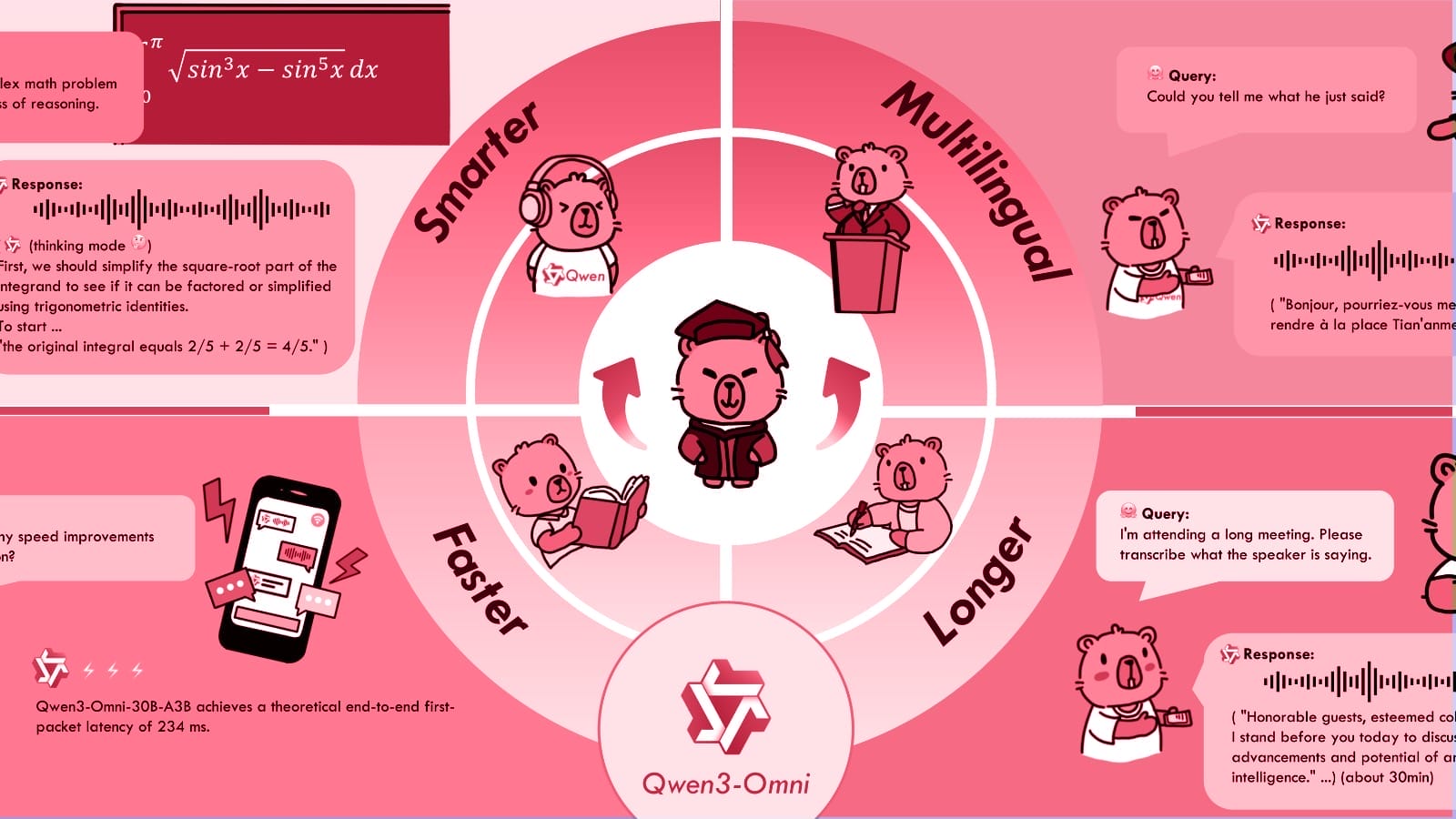

Alibaba has launched Qwen3.5, a new artificial intelligence model designed to function as a multimodal agent capable of processing text, images, and video. The Qwen team announced the release on the company’s website. The model contains 397 billion parameters but activates only 17 billion per task, which the team says optimizes both speed and cost. This …